While searching for some other information on Dart performance I accidentally tripped across Nikolay Botev’s blog post on the Dart benchmarks against the JVM, JavaScript, and C . It is part of a suite of benchmarks that the Dart language team has created, here is the GitHub project for the benchmark repository . The one he concentrated on was the DeltaBlue algorithm benchmark. The DeltaBlue benchmark is originally a SmallTalk constraint solving problem documented in the January 1990 issue of “Communications of the ACM” paper “The DeltaBlue Algorithm: An Incremental Constraint Hierarchy Solver” by Bjorn N. Freeman-Benson and John Maloney. The version he implemented here is an intentionally crude transliteration of the original SmallTalk code into Dart, JavaScript, and C. It’s been 7 years since he wrote that blog post and the performance page on the Dart language page is long gone. I thought it’d be interesting to see how the performance of the different engines has evolved. There are some interesting results and one interesting performance paradox I want to explore more.

Setup

As stated above I started with the now relatively long in the tooth benchmark code that Botev was using 9 years ago. He was benchmarking against the then-current versions of the Java JDK, GCC, V8 JavaScript engine, and Dart runtime. To reproduce the results I created a VM that instead had the latest environments for each of those. For today’s run that means it’s using the OpenJDK 17.0.1, GCC 9.3, V8 9.9, and Dart 2.15. OpenJDK and GCC were pulled down from the Ubuntu APT repositories. V8 and Dart were built from source on the machines following the instructions. Unlike in his tests all of these were done with 64-bit mode. When he wrote it ten years ago there was some contention about whether to run it in 32 or 64 bit mode. In the present day that is moot.

Along with the runtime environments there was the question of the code itself. In the intervening years Dart added null safe capabilities. While it is possible to get the old code running in present Dart by putting a comment at the top limiting the Dart version to the pre-null safe days I wanted to actually upgrade the code for null safety as well to see if that impacted performance. Likewise the JavaScript code as originally written was pre-ECMAScript 6 (ES6). ES6 is where real syntactic classes with things like inheritance were introduced. I wanted to have a version of the code that was written in modern ES6 class syntax rather than some of the home rolled things they did to get class inheritance in the pre-ES6 days. Lastly the Dart runtime supports on demand running as well as ahead of time compilation. I wanted to compare the relative performance of those two modes in the same way he compared the performance with unoptimized and optimized C code.

Methodology

I wanted to compare the original code to more modern idiomatic code in the respective runtime environments. I therefore have a version of the code with minimal changes to get operating in the modern runtimes. I also have a second implementation which updates the syntax to the modern languages. Below the conclusion section you can read about some of those details. The script itself runs each of the program configurations with the iteration count set to 10, 100, 10,000, and 100,000. That script is run ten times and the results are averaged together. The benchmarks were run on an Intel i7-6700K workstation running Linux Mint 20. During the benchmark runs no other applications or major resource hungry processes were running.

Results

The numbers output by the script fall into four categories:

- Loop time (measured in milliseconds): which measures how long it took to do the core algorithm divide by the number of iterations

- Run time (measured in seconds): which measures the entire lifespan of the process from the time the time it is created to the time it exits

- Max Memory (measured in KB): The maximum amount of memory that the process took according to the operating system

- Average CPU Usage (measured in % of one core): The average CPU usage of the process over its lifespan, which

timecomputes as a ratio of the process time to the run time.

I’m only going to concentrate on the first three since in the longer steady state case all of the cases essentially converge to using most or all of one core. In terms of the various different configurations that were tested they are:

- The Java implementation running against OpenJDK 17

- The C implementation compiled without optimizations

- The C implementation compiled with full optimization

- The original JavaScript code running on V8

- The ES6 updated JavaScript code running on V8

- The original Dart code running in the Dart runtime

- The updated null safety modified Dart code running in the Dart runtime

- The updated null safety modified Dart code compiled to an executable and then executed.

While the data for all of those is available in this OpenDocument spreadsheet {:target=_blank}. Because the original and ES6 JavaScript code turned in the same results within statistical bounds the graphs only have one JavaScript bar. The same is true for the Null safety upgrade code and the original code. Their data is still in the tables for those interested.

Loop Time

The metric that Botev zeroed in on, and the one which is most important for longer running processes, is how fast an algorithm can run on a warmed up process. For compiled applications written in C/C++ there isn’t as much startup sluggishness, but there is some. It is a dramatically different story for runtimes like the JVM, V8, and the Dart runtime. This is because those runtimes have a “Just-In-Time” (JIT) compiler that can optimize the low level code based on how the code is executing at runtime. This is in comparison to static compilers which do that by just looking at the source code and optimizing based on that. There are pluses and minuses of each. Obviously the warm up problem is a downside to JIT compilation for very fast code. However JIT can in some cases produce faster code than static compilation, as we are going to see here. Isolating the core of the algorithm helps us see this behavior in the benchmark. By increasing the iteration count from 10 to 100,000 we can see how long it takes for a runtime to reach a “steady state” iteration performance and what that performance will be. Compiled applications again don’t have as much warm-up issues as those running with a Just-In-Time (JIT) compiler but there can be some loop overhead that quickly dissipates.

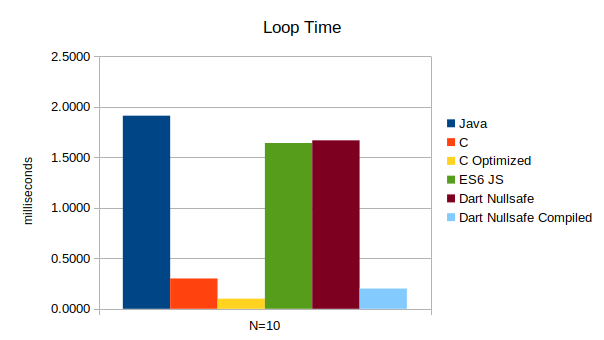

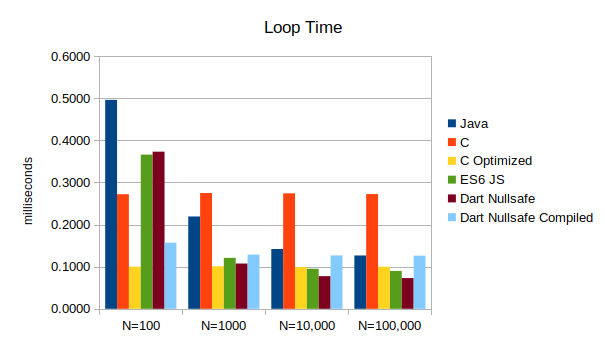

With so few iterations it really helps out the statically compiled cases: the two C cases and the AOT-compiled Dart case. The Java, V8 JavaScript case and Dart runtime cases are dramatically slower. However the Dart runtime and the JavaScript runtimes are performing essentially the same. As the number of iterations starts climbing though the performance of the JIT compiled languages dramatically improves.

By the time we get to 1000 iterations all but the Java and unoptimized C code are performing comparably. As we continue increasing the iteration count something interesting happens between the JavaScript and Dart cases. The JavaScript and Dart runtime cases start performing better than the optimized C code. The Dart runtime case is even edging out the JavaScript V8 engine as well! At the same time though the AOT compiled Dart code has had pretty flat performance. That was to its advantage earlier on but as the iteration count continues it starts being a performance laggard, only beating out the unoptimized C and Java tests.

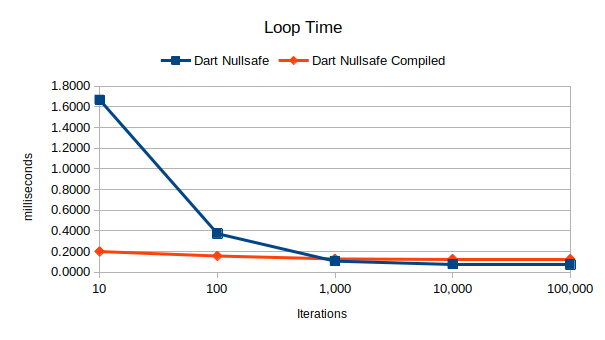

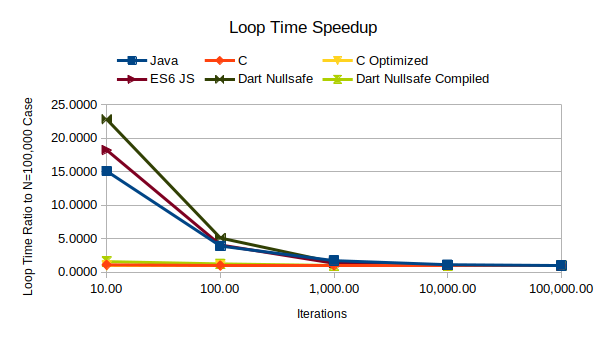

If we look at the speed up of the two cases we can see that while the runtime Dart case had a dramatic speedup as N went from 10 to 100 to 1000 iterations, the compiled case only did so marginally. We expect that to a certain, again because of warm-up issues with JIT. It is interesting though that the JIT case did continue to have marginal improvements in loop performance. The same graph of all the languages shows how this is very much a static versus JIT compiled system performance. My question is if it is possible to get a JIT into the binary. I believe the answer is a “no” either due to deployment policies of target platforms or for other technical reasons like reducing binary size. Because the optimized loop is the entire program it definitely has an effect on overall runtime performance in the higher iteration count.

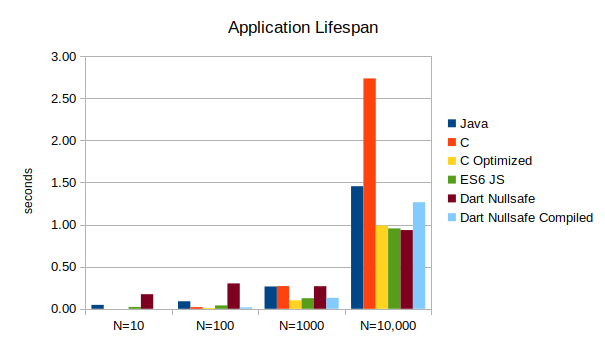

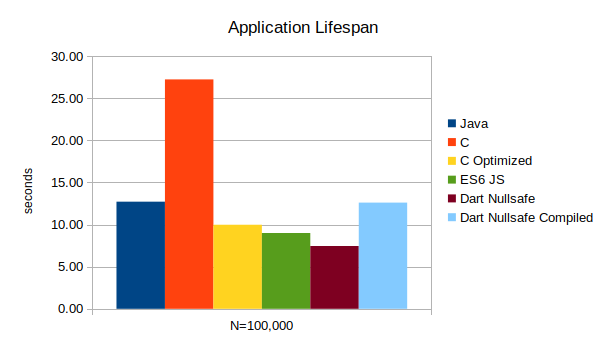

Runtime

The application lifespan is literally from the instant it is kicked off until it finally shuts down and returns back to the command prompt. For the smaller cases it will be dominated by any startup time or shutdown time overhead. For C and compiled Dart this is far smaller than it is for the V8 engine, Dart engine, or the Java VM (JVM).

As we can see the smaller test cases are all almost instantaneously starting/stopping. However looking deeper at the metrics there is still a dramatically longer process for the Dart runtime cases. The C cases and compiled Dart cases come in at measurably zero seconds. The V8 engine comes in at 0.02 seconds. Java is nearly twice that at 0.05 seconds. Sadly Dart runtime is six times worse than even that at 0.28 seconds. Even if you remove the one time this intermittent shutdown delay bug happens it’d still clock in at 0.18 seconds. As the larger cases come into play the startup overhead falls into the noise however.

By the time we get to the larger iteration count the extra loop performance actually made the Dart runtime case the fastest of them all, which it held through the end. In the case of the compiled Dart code it holds its own even with the C cases until crossing the N=1000 iteration count. After that its substantially slower loop time makes it the second slowest, only barely besting the JVM.

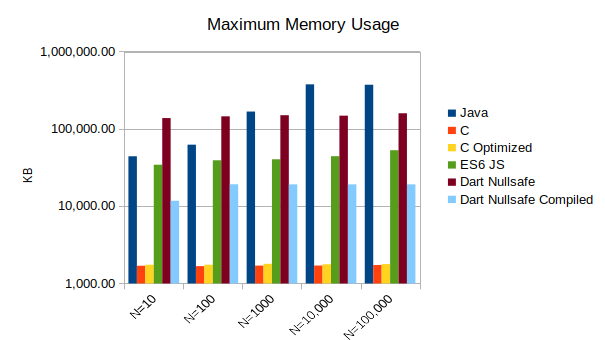

Memory

Besides actual runtime performance there is also the consideration of how much memory it takes to run a process. I previously documented in this post the dramatic difference in Dart runtime versus compiled memory footprints . How does it look for a real application and compared against the JVM, V8, and C code?

First, it is no surprise that C used the least of all of the languages. It has no memory manager, large runtime, VM overhead, etc. For the other languages there are some interesting trends. The only language which saw dramatic increases in memory usage as the number of cases increased was the JVM. It’s literally using more than 8x as much memory in the N=100,000 case compared to the N=10 case. This could be driven by lack of garbage collection with the tight loop. The other runtimes though don’t suffer this problem. Comparing Dart to the V8 engine we can see that the compiled Dart code is far more svelte than the corresponding V8 or JVM versions. However the Dart runtime version is by far the most memory hungry of them all for the shorter runs. It’s not until the higher counts that Java starts using more resources.

Conclusion

What conclusions can we make from this one brief benchmark? First, from a performance perspective Dart can certainly hold its own against any language. Whether in compiled or JIT form the Dart runtime turns in numbers comparable to the leading server side architectures: JVM and JavaScript. In this one benchmark it often turned in better performance than both V8 and C itself. If someone was concerned about Dart’s performance I think that should definitely put it to bed. That’s consistent with the results nine years ago but it has actually gained some ground compared to its rivals since then even as they have improved.

Another conclusion, which is actually more of a question, is that whether to run compiled Dart or runtime Dart code isn’t so straight forward. I had always assumed that compiled Dart code was the way to go. From a memory usage perspective and startup perspective it is a no brainer on that front. The relative overhead is so dramatically better for compiled Dart code. However the fact that in this case the JIT was able to dramatically improve on performance over time means that for certain applications there may be an advantage to using the runtime version instead. There are actually several ways to deploy code which range from the just passing dart files to the runtime to compiling them into intermediary code to the totally self contained executable. I’d be curious to explore if they’ve tried putting a JIT into the self contained executable version and/or what the impediments to that are.

Appendix: Code Details

I took the original benchmark code and made minimal changes to get it compiling and running in the modern engines. The update version of the code can be found in this GitHub fork I’ve created

. There were no needed changes for the C code. For the Dart code I added the line // @dart=2.10 to the top of the file which forces the Dart runtime to use the language as of 2.10 version. For the JavaScript version I had to fix a typo that was in the original version which prevented compilation/execution. I then created two new versions of those files. For the DeltaBlue.dart file there is now also a DeltaBlueNullSafe.dart which makes tweaks to the modern null safe syntax. Null safety is the developer saying they understand that there will be null handling in their particular code and what to do about it. The Dart Language site has a page on sound null safety

to describe that in detail. It’s relatively small tweaks though. In the case of the JavaScript the changes to get into ES6 class syntax were much more dramatic.

Just to give a small example lets look at the EditConstraint class. The whole algorithm is a constraint analysis problem. There are several different types of constraints. So there is a base class Constraint which all other constraints extend. In the old pre-ES6 code the EditConstraint looked like:

function EditConstraint(v, str) {

EditConstraint.superConstructor.call(this, v, str);

}

EditConstraint.inheritsFrom(UnaryConstraint);

/**

* Edits indicate that a variable is to be changed by imperative code.

*/

EditConstraint.prototype.isInput = function () {

return true;

}

EditConstraint.prototype.execute = function () {

// Edit constraints do nothing

}

This is how one “faked out” classes and inheritance in JavaScript back in the day. You have a function with the name of the class type you’d use. That acts as the constructor. Any methods were added using the prototype properties which you add function handles to. There was no concept of inheritance so at the top of the original code there was the inheritsFrom method which added things like the superclasses’ constructor etc. These are all very rudimentary object oriented programming concepts that with ES6 became actual language features. The deltablue_es6.js version is the conversion to the modern more “normal” syntax:

class EditConstraint extends UnaryConstraint {

constructor(v, str) {

super(v, str);

}

/**

* Edits indicate that a variable is to be changed by imperative code.

*/

isInput() {

return true;

}

execute() {

// Edit constraints do nothing

}

}

2021-12-22

in

2021-12-22

in

12 min read

12 min read