Yesterday’s post on Server Side Dart REST Framework performance really started from what I’m documenting today: figuring out whether Go is really much faster than Dart for REST service work. I’m taking time in the month of February to try to learn Go. I came up with the idea of making a little e-book REST service which takes an EPUB book from Project Gutenberg and re-hosts it as a series of chapters in simplified text, complete with linking between chapters, a table of contents, etc. Unfortunately the only EPUB reader library for Go doesn’t work well. I did find a good one for Dart though! I then began thinking about writing the whole thing in Dart, or at least the EPUB processor. That would defeat the purpose of the project in the first place, but it did get me asking myself the question, “Is Go really going to be any faster than Dart for this sort of thing?” And thus this whole project began. The previous post detailed the various layers of Dart performance. This one looks at Dart vs. the built-in Go HTTP server library. The code and data can be found in this GitLab repo.

Introduction

As I said, this started off as a simple “What is the relative performance of the built-in HTTP server in Dart compared to Go?” Unfortunately things got a little more complicated. As I wrote in the Dart article, the Dart world is shifting from the original, now “discontinued” HTTP server to a new one called Shelf. The problem was that I was seeing some pretty big differences in performance, and the newer system was worse than the old one. As we will see, the performance was also pretty far off Go’s. I therefore decided to see if one of the other Dart frameworks did much better. This mattered especially for scaling across multiple processors, since the Go server does that by default while in Dart only one framework, Conduit, truly supports it. I discovered the wrk tool through a simple internet search to see whether someone had done this sort of analysis at all and/or recently. I found this article which did a smaller version of what I did here.

Goals and Methodology

The main purposes of this study are to determine:

- Whether there is a substantial difference in the performance of Go vs. Dart for serving a REST service

- The maximum throughput of a REST server in Go and Dart

- How well either system scales to use the resources of a multiprocessor system

- Any failure modes with a server under stress

The main points of the technique used to determine this are:

- Write a simple REST server in each platform with one route to the root path, /

- At run time one of two versions of the REST service will be injected. One version produces the static string “Hello World!”, which will be served back. The second will dynamically build a string based on the current time formatted as an ISO/RFC string.

- Use the wrk HTTP benchmarking tool to stress test each server and generate statistics about the responses over a given interval (for the current data set a 60 second run)

- wrk is configured to simulate 1000 users using 4 system threads and execute against the root endpoint for 60 seconds total.

- To make the process as clean as possible the wrk tool and the servers were run on their own separate machine instances in DigitalOcean. Both instances were hosted out of the same data center with communications being done through their public IP addresses. The driver machine had 8 dedicated vCPUs. The server machine had 8 dedicated vCPUs for most tests but for scaling tests had up to 40 dedicated vCPUs. The tests never saturated the CPU or memory of the driver machine.

- The implementations are kept as similar as possible so that no one implementation has an advantage. The Go snippet appears under Go Implementation. The previous Dart article has the code for each of the Dart implementations.
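The load parameters listed above map fairly directly onto wrk's command line options. As a rough sketch, the helper below only assembles and prints an invocation; it does not run wrk. The helper name `wrkArgs`, the `SERVER_IP` placeholder, and the choice to pass `--latency` are assumptions for illustration, since the post does not show the exact command that was used.

```go
package main

import (
	"fmt"
	"strings"
)

// wrkArgs builds the argument list for one wrk run.
// Hypothetical helper: the post does not show the actual command line.
func wrkArgs(threads, connections, seconds int, url string) []string {
	return []string{
		"-t", fmt.Sprint(threads), // OS threads used by wrk
		"-c", fmt.Sprint(connections), // open connections, i.e. simulated users
		"-d", fmt.Sprintf("%ds", seconds), // duration of the run
		"--latency", // also print the latency distribution
		url,
	}
}

func main() {
	// SERVER_IP is a placeholder for the public IP of the server instance.
	args := wrkArgs(4, 1000, 60, "http://SERVER_IP:8080/")
	fmt.Println("wrk " + strings.Join(args, " "))
}
```

Building the arguments as a slice keeps the sketch easy to hand to `os/exec` later if the runs are to be scripted.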

Go Implementation

```go
if staticString {
	fmt.Println("with static response")
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "Hello World!")
	})
} else {
	fmt.Println("with dynamic response")
	http.HandleFunc("/", func(w http.ResponseWriter, r *http.Request) {
		fmt.Fprintln(w, "The time is: "+time.Now().Format(time.RFC3339))
	})
}

http.ListenAndServe(":8080", nil)
```
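Before pointing a load generator at the server it can be worth confirming what each variant actually returns. The following is a minimal sketch that exercises both handlers in memory with the standard `net/http/httptest` package, so no port is opened. The function names `staticHandler`, `dynamicHandler`, and `call` are made up for this example; the handler bodies mirror the snippet above.

```go
package main

import (
	"fmt"
	"net/http"
	"net/http/httptest"
	"time"
)

// staticHandler mirrors the static variant: a fixed string.
func staticHandler(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintln(w, "Hello World!")
}

// dynamicHandler mirrors the dynamic variant: the current time in RFC 3339 form.
func dynamicHandler(w http.ResponseWriter, r *http.Request) {
	fmt.Fprintln(w, "The time is: "+time.Now().Format(time.RFC3339))
}

// call runs a handler against an in-memory GET / request and
// returns the status code and response body.
func call(h http.HandlerFunc) (int, string) {
	req := httptest.NewRequest(http.MethodGet, "/", nil)
	rec := httptest.NewRecorder()
	h(rec, req)
	return rec.Code, rec.Body.String()
}

func main() {
	code, body := call(staticHandler)
	fmt.Printf("static:  %d %q\n", code, body)

	code, body = call(dynamicHandler)
	fmt.Printf("dynamic: %d %q\n", code, body)
}
```

Note that `fmt.Fprintln` appends a newline, so both response bodies end with one.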

Because the Go server will by default use every available core on the system, a head-to-head comparison with the mostly single-core Dart code needs a way to restrict it. The server therefore looks for a command line argument that throws it into single core mode, which limits the runtime to executing Go code on one CPU at a time using:

```go
if singleCore {
	runtime.GOMAXPROCS(1)
}
```
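To see the effect of that call on a given machine, a small standalone sketch like the one below can help. It relies on the documented behavior that `runtime.GOMAXPROCS(0)` reports the current setting without changing it. The helper name `applyCoreMode` is hypothetical and not taken from the project's code.

```go
package main

import (
	"fmt"
	"runtime"
)

// applyCoreMode caps the runtime at one CPU when singleCore is true and
// returns the GOMAXPROCS value in effect afterwards.
func applyCoreMode(singleCore bool) int {
	if singleCore {
		runtime.GOMAXPROCS(1)
	}
	// Passing 0 only queries the current setting.
	return runtime.GOMAXPROCS(0)
}

func main() {
	fmt.Println("logical CPUs:", runtime.NumCPU())
	fmt.Println("GOMAXPROCS by default:", applyCoreMode(false))
	fmt.Println("GOMAXPROCS in single core mode:", applyCoreMode(true))
}
```

The same limit can also be applied without code changes through the `GOMAXPROCS` environment variable, which the Go runtime reads at startup.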

Results

Along with the results description below you can find the raw results and spreadsheet in the project’s GitLab repo. Let’s first take a look at the throughput numbers. Throughput was measured over regular intervals of the execution and reported out with statistical information at the end of the run. We therefore have the average, standard deviation, and maximum throughput of each of our configurations.

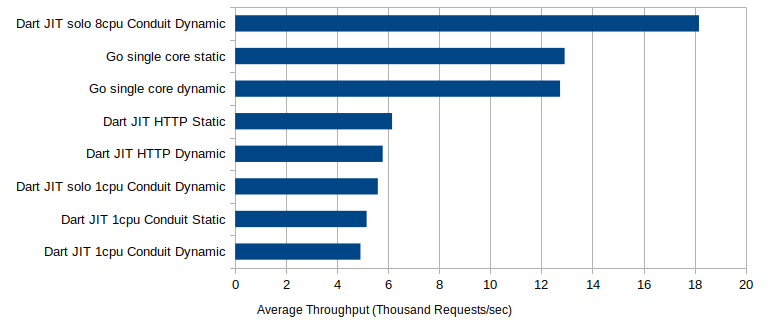

First, the graphs have been simplified somewhat to show just the best performing Dart servers. For this first graph we are also only looking at single core Go performance. As with the Dart case, there is a small difference between the static and dynamic cases. The difference is comparable and not worth discussing further. The big difference is between the Dart and the Go performance. While the single core Dart servers max out around 5,000-6,000 requests per second, the Go server is clocking in at almost 13,000 requests per second. That’s a 2-2.5 times performance difference between the two. If we let the Conduit Dart server use all the CPUs on the 8 core server it can finally overtake the Go server, bringing in over 18,000 requests per second. But as soon as we allow the servers to use more than one core we should unencumber the Go server as well to see how its multi-core performance comes in:

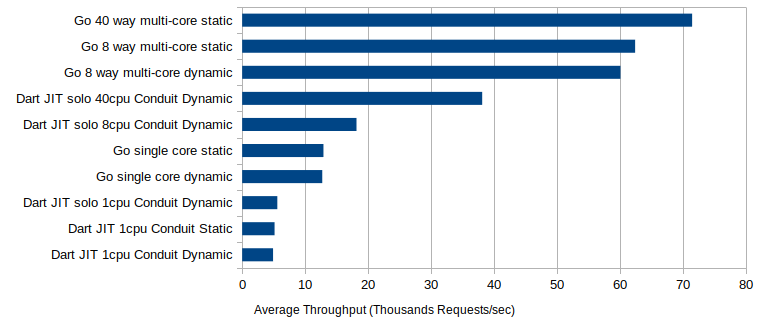

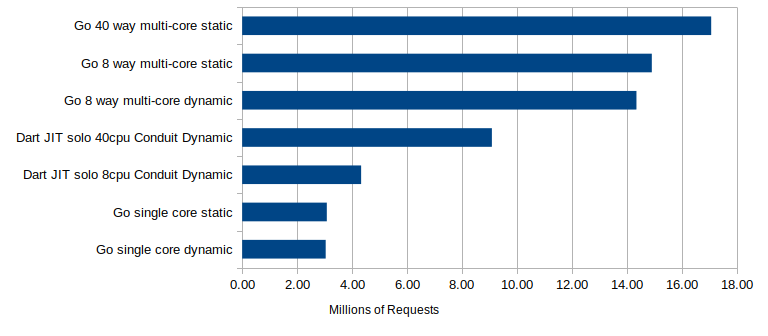

This graph is even more stark than the single processor case. When we allow the Go server to also use all eight cores it brings in 60,000-62,000 requests per second. That is 3.3 times as much throughput as the Conduit server achieved with eight cores. When we scale up to the 40 core server we see that even with 40 cores the Conduit server could not match the performance of the 8-core Go server. At the same time, throwing 40 cores at the Go server increased throughput to 71,500 requests per second, a 15% increase. This isn’t a huge increase. When I saw that Conduit was only using about 10-11 cores of the 40 core server, I attributed that to perhaps the network saturating. Seeing how much more throughput the Go server was able to achieve, I’m not buying the network argument for the Conduit server only using 10 cores. However, I am potentially buying it for Go on the 40 core server. Under this loading scenario the Go server was using between 13 and 17 of the 40 cores. Again it is obviously getting throttled somewhere else. Meanwhile the driver machine’s CPU load was still maxing out at 3 cores, despite being given up to 4 threads to work with. By comparison the driver machine was only using 1.25-1.5 cores against Conduit. I therefore believe networking resources may be artificially limiting the 40 core performance, but we can see that the throughput of Go compared to Dart is very impressive. What about latencies?
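The ratios quoted in this section follow directly from the throughput figures. As a quick sketch of the arithmetic, using the rounded numbers from the text rather than the raw data in the repo:

```go
package main

import "fmt"

// ratio reports how many times larger a is than b.
func ratio(a, b float64) float64 { return a / b }

// percentIncrease reports the relative growth from one value to another, in percent.
func percentIncrease(from, to float64) float64 { return (to - from) / from * 100 }

func main() {
	// Rounded requests-per-second figures quoted in the post.
	const (
		goOneCore    = 13000.0
		goEightLow   = 60000.0
		goEightHigh  = 62000.0
		goFortyCores = 71500.0
		conduitEight = 18000.0
	)

	fmt.Printf("Go vs Conduit on 8 cores: %.1fx to %.1fx\n",
		ratio(goEightLow, conduitEight), ratio(goEightHigh, conduitEight))
	fmt.Printf("Go from 8 to 40 cores: +%.0f%%\n",
		percentIncrease(goEightHigh, goFortyCores))
	fmt.Printf("Go from 1 to 8 cores: %.1fx to %.1fx\n",
		ratio(goEightLow, goOneCore), ratio(goEightHigh, goOneCore))
}
```

The last line is not a number the post states. It is only the single core and eight core figures divided, and it suggests that eight cores bought Go somewhat less than five times the single core throughput in this setup.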

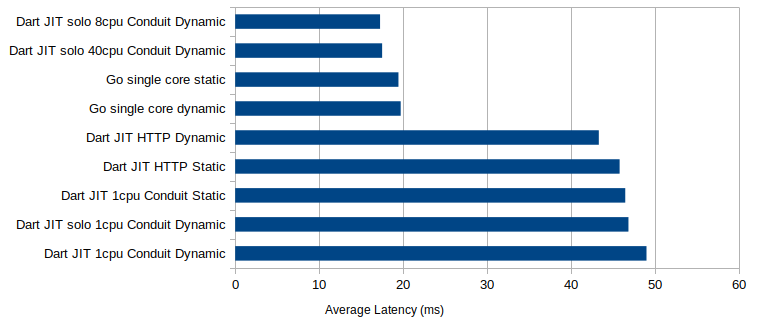

Again the difference is pretty stark. While the single core Dart runtimes are getting latencies of at best 45 milliseconds, the Go runtime comes in at less than 20 milliseconds. By bringing up Conduit in multi-core mode it is possible for it to marginally beat Go, but again, if we are going to compare multi-core performance we should unencumber Go as well:

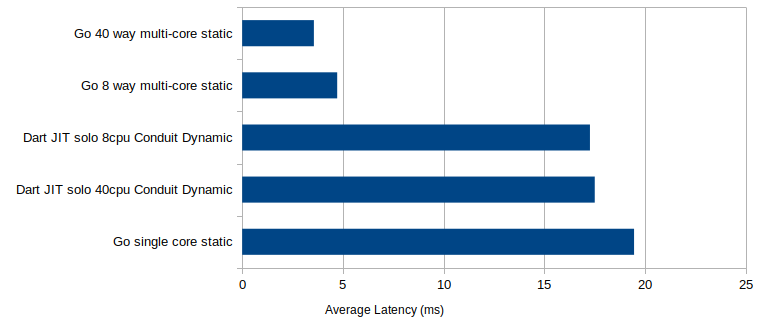

Just like with Dart, letting the Go runtime use multiple cores dramatically improved the latency as well. Latencies dropped from just under 20 milliseconds down to 3.5 to 4.75 milliseconds. This blows away the best Dart latencies in multi-core mode of just over 17 milliseconds. All of this leads to stark total request numbers as well:

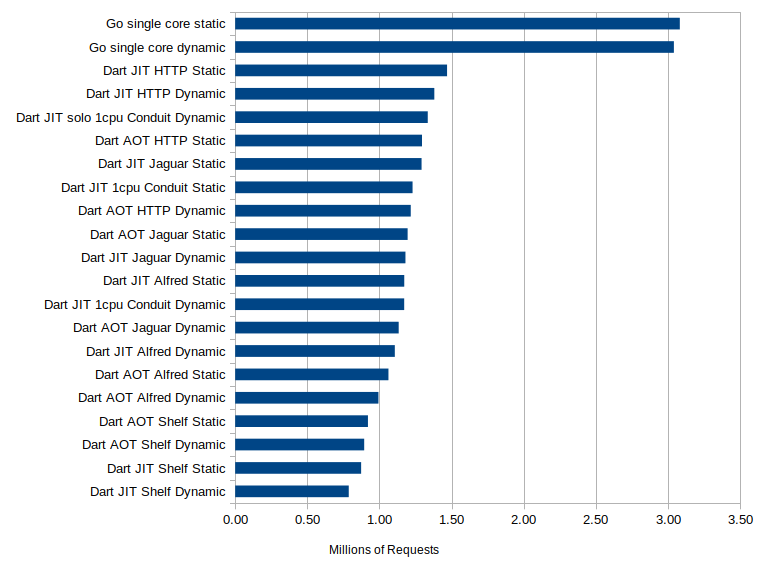

For this graph I decided to throw in all the Dart cases. As we can see the total number of requests serviced by Go is between 2 and almost 4 times as much, at just over 3 million requests serviced compared to 790,000 to 1.47 million for the Dart engines. Ironically the old “discontinued” http_server had pretty much the best performance while the new “standard” server had the worst. It doesn’t get any better for Dart with the multiprocessor cases:

The Go server on the eight core machine was 3.3 times as fast as the corresponding Conduit server, pumping out 14.3 million requests to Conduit’s 4.3 million. The gap closes a bit on the forty core machine where Conduit manages to get up to just over 9 million requests to Go’s just over 17 million, but as stated previously I believe we were reaching network saturation at that point.

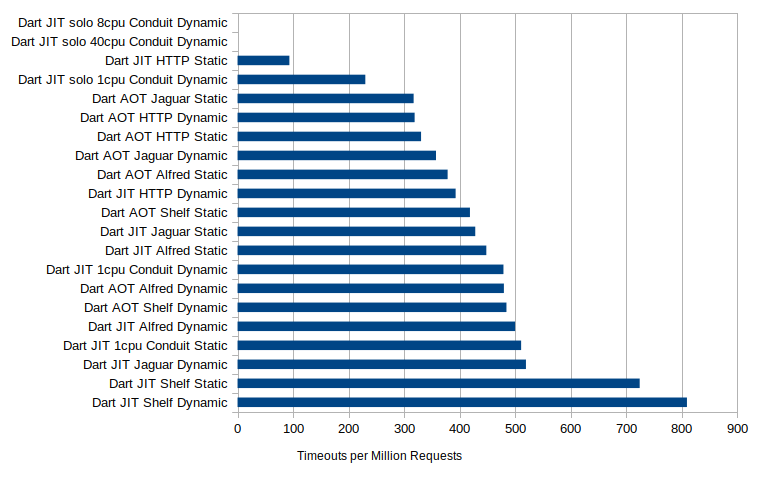
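The totals can be cross-checked against the 60 second run length. The sketch below uses rounded figures from this post and divides each total by the run time to get an aggregate rate. The results come out at close to four times the throughput figures quoted earlier. One possible reading, which the post does not confirm, is that the throughput graphs show wrk's per-thread statistics for its 4 threads while the totals cover all threads together.

```go
package main

import "fmt"

// perSecond converts a request total for one run into an average rate.
func perSecond(total, seconds float64) float64 { return total / seconds }

func main() {
	const runSeconds = 60.0

	runs := []struct {
		name   string
		total  float64 // total requests, rounded, as quoted in the post
		quoted float64 // throughput quoted earlier in the post, requests/second
	}{
		{"Go, 8 cores", 14.3e6, 62000},
		{"Conduit, 8 cores", 4.3e6, 18000},
		{"Go, 40 cores", 17e6, 71500},
	}

	for _, r := range runs {
		rate := perSecond(r.total, runSeconds)
		fmt.Printf("%-17s %8.0f req/s aggregate, %.1fx the quoted %.0f req/s\n",
			r.name, rate, rate/r.quoted, r.quoted)
	}
}
```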

In the Dart study I mentioned the number of requests that timed out per test case. Below is the graph showing that for the various Dart servers. I’m not updating it with Go data because across all the cases, even the single CPU ones, the Go server had no timeouts. Based on the other performance metrics it makes sense.

Along with the overall magnitudes, Go showed much better stability in its statistics as well. Let’s look at the latency statistics. The single core Dart latencies were not only larger, their standard deviations relative to their averages were larger as well. Average latencies across them were about 50 milliseconds but their standard deviations were also about 50 milliseconds, and their 99% latency value (meaning only 1% of cases were larger than this) was almost 70 milliseconds. Go single core, on the other hand, clocked in at about a 19 millisecond latency with a standard deviation of just 4 milliseconds and a 99% value of about 27 milliseconds, all much tighter. The multi-core cases were even worse for Dart. While the average latency for the Dart server did get down to about 17 milliseconds, the standard deviation either only halved or went up, and the 99% value exploded to 100-228 milliseconds. The multi-core Go latencies also dropped, and while their standard deviation and latency statistics stayed about the same in the 8 core case, they plummeted in the 40 core case.
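For readers who want to reproduce this kind of summary from raw latency samples, here is a minimal sketch of the three statistics discussed above. The sample values are made up for illustration and are not taken from the benchmark data. The sketch assumes a population standard deviation and a nearest-rank percentile, which may differ in detail from how wrk computes its own figures.

```go
package main

import (
	"fmt"
	"math"
	"sort"
)

// mean returns the arithmetic mean of the samples (0 for an empty slice).
func mean(samples []float64) float64 {
	if len(samples) == 0 {
		return 0
	}
	sum := 0.0
	for _, s := range samples {
		sum += s
	}
	return sum / float64(len(samples))
}

// stdDev returns the population standard deviation of the samples.
func stdDev(samples []float64) float64 {
	if len(samples) == 0 {
		return 0
	}
	m := mean(samples)
	sq := 0.0
	for _, s := range samples {
		sq += (s - m) * (s - m)
	}
	return math.Sqrt(sq / float64(len(samples)))
}

// percentile returns the nearest-rank p-th percentile (0 < p <= 100):
// the smallest sample such that at least p percent of samples are <= it.
func percentile(samples []float64, p float64) float64 {
	if len(samples) == 0 {
		return 0
	}
	sorted := append([]float64(nil), samples...) // leave the input untouched
	sort.Float64s(sorted)
	rank := int(math.Ceil(p * float64(len(sorted)) / 100))
	if rank < 1 {
		rank = 1
	}
	if rank > len(sorted) {
		rank = len(sorted)
	}
	return sorted[rank-1]
}

func main() {
	// Hypothetical latencies in milliseconds, one tight set and one spread out.
	sets := map[string][]float64{
		"tight":  {18, 19, 19, 20, 19, 18, 20, 21, 19, 27},
		"spread": {10, 12, 15, 20, 25, 30, 40, 60, 90, 200},
	}
	for _, name := range []string{"tight", "spread"} {
		s := sets[name]
		fmt.Printf("%-6s mean=%.1f ms  stddev=%.1f ms  p99=%.0f ms\n",
			name, mean(s), stdDev(s), percentile(s, 99))
	}
}
```

A standard deviation that is as large as the mean, as in the spread set, is the kind of pattern described above for the single core Dart servers.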

Conclusion

To be crass, the Go built-in HTTP server easily bested all of the Dart servers tested here, and it wasn’t even close. Worse, the new built-in Dart server is by far the worst performer of the lot. The Go server also allowed for far more scaling in a far easier way than the Dart servers did, along with having far better single core performance. Does this mean one can’t/shouldn’t use Dart for their server? That is beyond the scope of this study. Most people’s services aren’t going to have a sustained load of thousands of requests per second. For those services the much better Go performance is noteworthy, but raw performance is unlikely to be the limiting factor. Similar performance differences may or may not appear deeper in the stack: the ORM, database access, etc. The available libraries, the development team’s familiarity with the language, etc. all need to factor in as well. In the same way one would choose Ruby on Rails over Express.js on Node for various very valid reasons despite the performance advantage of Node, so too one could make that calculus here. In fact, if one were comparing Conduit (the fork of Aqueduct) to Ruby on Rails and Sinatra in these benchmarks, it is still much higher performing.

2022-02-12


10 min read
