The year was 2005. The news that the father of Linux, Linus Torvalds, was rage-quitting his source code management (SCM) system, BitKeeper, spread widely because he said he was stopping all his Linux development until he had made a new SCM system that would be better than all the others. Could he pull off another genius move like he had with Linux? Would it in practice be just for his own use, or would it become the new de facto standard and revolutionize how we did source code management?

We now know that he did hit another home run with his SCM system, called Git. It is the de facto standard for SCMs around the world. It is no exaggeration to say that it revolutionized the scalability of development and transformed the way we work with SCM systems. We forget how much harder it was to imagine this endpoint back then. Tripping across this presentation he gave on Git at Google in 2007 really brought all those memories forward for me.

Git at this point was barely two years old. It had not seen wide adoption but it was gaining some buzz. It wasn’t the first distributed SCM system, but it was promising to fix problems with all SCM systems, including the existing distributed ones. At the same time, the idea of a distributed SCM system was pretty unfamiliar to people. Most of us were used to centralized, server-centric SCM systems. I myself had my first experience with SCMs with the Perforce system at my first job. When I started my own company in 2005 and couldn’t afford the $1000 a year starting cost, I switched over to a popular free alternative called SVN. There was also the granddaddy, CVS, which got every deserved ounce of scorn from Linus in this speech, and a whole host of proprietary solutions. Almost all of these were server-based SCMs. People who didn’t develop back then may hear “server-centric” and think of GitHub or GitLab, but it was a dramatically different experience.

The way we use SCM software now, we make our changes and check-ins on our own computers to our heart’s content and then push and pull changes to and from “the server”. In these classic systems, making commits or seeing what the differences were was done through direct communication with the server. You wanted to see what changed in your file compared to what it was originally? You asked the server that question. You wanted to see the history of the changes to the file? Again, you had to ask the server for that. For small teams connected over high speed local networks this wasn’t too bad. As soon as you had very large teams dispersed across the country, however, things became very problematic. Server needs grew substantially. Network needs were a much bigger problem, because scaling your network to fix it ranged from much more expensive to technologically impossible. Some places just can’t get high speed networking at any cost, to boot. The system could quickly grind to a halt. This is the strength of distributed SCM systems in general. When you do a git commit or git diff you are working purely locally, and you only synchronize “to the server” when you are done. This means you can work on your own code on an airplane, in a coffee shop, or anywhere else you want, and you don’t have to wait until you get on “a real network” to finally commit all your work. Distributed SCMs are what make that possible. However, that wasn’t the most dramatic change.
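To make the local-versus-server distinction concrete, here is a minimal toy sketch in Python. It is not how Git stores anything; `LocalRepo`, `network_calls`, and the list standing in for a server are all made-up names for illustration. The only point is that committing and diffing touch local state alone, while synchronizing is the one step that needs the network.

```python
import difflib


class LocalRepo:
    """Toy working copy that keeps its whole history locally (hypothetical model)."""

    def __init__(self):
        self.history = []       # committed snapshots, oldest first
        self.network_calls = 0  # incremented only when we synchronize

    def commit(self, text):
        # Recording a snapshot touches only local state.
        self.history.append(text)

    def diff(self, working_text):
        # Compare the working copy with the last local commit; no server needed.
        last = self.history[-1] if self.history else ""
        return list(difflib.unified_diff(
            last.splitlines(), working_text.splitlines(),
            fromfile="committed", tofile="working", lineterm=""))

    def push(self, server):
        # The only operation that needs a network round trip.
        # Simplification: assumes the server's list is a prefix of our history.
        self.network_calls += 1
        server.extend(self.history[len(server):])


repo = LocalRepo()
repo.commit("hello\n")
repo.commit("hello\nworld\n")
changes = repo.diff("hello\nworld!\n")  # answered entirely from local history
calls_before_push = repo.network_calls

server = []  # stand-in for the shared remote
repo.push(server)
```

In a server-centric system, the equivalents of both `commit` and `diff` would each have been a round trip.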

In the olden days branching and merging were hard. Just this last week I was thinking back to a time when a new engineer had the idea of doing every small feature in its own branch and then merging it on completion. We were an SVN shop at the time. Today that is how most development is done, thanks to Git, but on SVN in the 2010 era that sort of workflow would have been a nightmare. Branching was easy enough. It was the merging that was crazy hard. Linus brutally addresses this in his talk. In CVS, branching and merging were excruciating. The SVN team prided itself on fixing the branching part. What they left as a huge gap, though, was the merging process. As Linus points out, it was the merging process that needed the most fixing, not the branching. When I was thinking back on that circa-2010 episode I wondered if perhaps I had just not been generous enough with the suggestion. With these memories refreshed, though, I think it is more that we have all gotten spoiled by how easy Git made merging.

In the presentation Linus talked about doing merges at least 5 times a day across thousands of files. It is the Linux kernel after all. It ties together lots of people editing thousands of files. That sort of merge workflow seemed astonishing to someone who used Perforce or SVN. As Linus pointed out, in Perforce or SVN it would actually be impossible. To people at the time, even in that room at Google, it also seemed like a performance metric of little relevance to normal projects, because most people didn’t need to merge that often. Linus addressed that by saying that making certain things substantially easier changes behaviors. He was 100% right about that.

Today we do merges so often we don’t even think about them anymore. Do you ever “pull down code from the server”? You just did a merge, albeit behind the scenes. Did you accept a pull request from a “feature branch” into a base branch? That was a more obvious merge. Did you push your code to the server? That only went through because your history had already been merged with whatever the server had. It’s merges all the way down, actually. The entire distributed SCM workflow wouldn’t work if merging weren’t seamless, so it was more than just a nice-to-have. It was a requirement. It was one that was done so well that merges don’t seem so scary anymore.

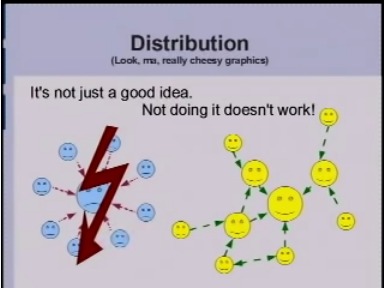

Watching the Google engineers try to grok how Git worked and made sense probably seems odd to present day viewers who didn’t live through the era. But imagine explaining to a mid-1980s person a smartphone when they had barely seen computers used for daily tasks and the idea of a global high speed network connecting all devices was more fantastical sounding than what they saw on Star Trek: The Next Generation. It was so radically different that it was hard not to see more pitfalls than salvations, especially since what we were doing was working well enough 80% of the time. Part of the problem was the way the decentralization was presented. Here is Linus’s slide showing the “bad way” of traditional server based SCM to the “good way” distributed SCM of Git:

In this slide you see that all the users on the left, all sad of course, talk to a central place, whereas in the distributed way we have users large and small all communicating directly and it all “just works”. The first question that came up from our server-centric workflow brains was, “Then who has ‘the’ latest gold standard version?” The answer of “no one and everyone” made absolutely no sense to anyone in the room, or to most of us at the time. There had to be one copy somewhere that we all agreed upon as the one true gold copy of the repository. The technological answer is, “No, there doesn’t.” The human factors answer is, “Just agree upon the place you want to put it and do that if you so choose.” And so choose we all did.

What made Git take over the SCM world? It wasn’t its insanely better merging and distributed nature. Git existed for a long time, and BitKeeper for five years before that, without really taking over everything. SVN did make merges suck, but for most people doing most things it worked well enough. Explaining Git and distributed source control to newbies was a lot harder too, and still is even today, so “if it ain’t broke, don’t fix it” was more often the mantra you’d hear. The thing that moved the needle was GitHub, GitLab, etc. giving us a de facto, turnkey, easy way to nominate the one copy of the repository that a project collectively agrees is “the gold copy”, creating tools to manage that easily, and making the whole system approachable. In other words, those tools solved the human factors half of the problem after Git solved the technological one.

In fact my company’s own journey to Git had to deal with this particular issue. We were not 100% happy with SVN. It was working well enough in most cases, but with the surge in acceptance of Git and Mercurial we knew we should be able to do better with a popular distributed SCM. We therefore started looking at how to move to either Mercurial or Git. The big thing we needed was a turnkey solution that let us host repositories on an internal server so that someone could pop on, sync up, pop back off, and go about their business. We weren’t worried about changing our issue tracking, wikis, or other things at the time. The stumbling block was the primary workflow: making sure a clean central place to synchronize with was available. At the time there was no real product offering that for Git. There were best practices for hosting it on a shared drive and things like that. All of that felt hackish when we experimented with it. Mercurial, on the other hand, did have a hosting option, so we ended up going with that. Years later, when GitHub had become pervasive, GitLab gave us the option to self-host, and Atlassian supported Git in their tools for projects that required Atlassian suite systems, we jumped from Mercurial over to Git.

So does this mean we are really just the blue sad faces on the server-centric left side of the slide again after all? Not at all. Even though we have converged on an easy way to nominate the places where we keep our “gold copies”, we are still doing fully decentralized SCM. We work with just our local cloned copies until it’s time to synchronize. We can do lots of little commits with no network access and no trouble at all. It’s even possible for us to add remote references to our co-developers’ workstations, for a group that wants to do its own collaboration off network if it so chooses. Apart from the PR conventions most projects require, we don’t even need to use the central “gold copy” hosting service to do any of the work. Most importantly, it is true that technologically no one copy is more special than another.

As an extreme example, let’s say by some stroke of bad luck your self-hosted GitLab or Gitea server crashed and died. The backups turned out not to work and the server is gone forever. In the old SVN days this is the point where you are toast. You would build a new SVN server and create an initial check-in from the copy of the source code on someone’s machine that everyone agreed was closest to the latest copy. But the rest of the history was gone forever. All the tags of major/minor release versions, the change history, etc. are gone. With Git and other distributed SCMs we all have all of that data. In the same scenario someone stands up a new GitLab/Gitea server, you add a brand new project on it as a new remote endpoint, and you do a git push. At that point everything is back to normal again. I can’t imagine going back to the old way.
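The recovery scenario is easy to sketch with a toy model. The Python below is only an illustration under simplifying assumptions: `Repo`, its fields, and the way commit ids are computed are hypothetical stand-ins rather than Git's real data structures. What it does show is the property that matters here: a clone carries the full history and tags, so pushing from any clone to an empty server restores everything.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Repo:
    """Toy repository: every copy holds the complete history (hypothetical model)."""
    commits: dict = field(default_factory=dict)  # commit id -> (parent id, message)
    tags: dict = field(default_factory=dict)     # tag name -> commit id
    head: Optional[str] = None

    def commit(self, message):
        # Derive an id from the parent and the message, then advance head.
        parent = self.head
        cid = hashlib.sha1(f"{parent}:{message}".encode()).hexdigest()
        self.commits[cid] = (parent, message)
        self.head = cid
        return cid

    def clone(self):
        # A clone copies all commits and tags, not just the latest files.
        return Repo(dict(self.commits), dict(self.tags), self.head)

    def push(self, remote):
        # Send everything we have; simplified to ignore conflicts entirely.
        remote.commits.update(self.commits)
        remote.tags.update(self.tags)
        remote.head = self.head

    def log(self):
        # Walk parent links from head back to the first commit.
        out, cid = [], self.head
        while cid is not None:
            parent, message = self.commits[cid]
            out.append(message)
            cid = parent
        return out


server = Repo()
server.commit("initial import")
server.tags["v1.0"] = server.commit("release 1.0")

laptop = server.clone()
laptop.commit("fix typo")  # local work done after cloning

del server                 # the server and its backups are gone

replacement = Repo()       # a freshly installed, empty server
laptop.push(replacement)   # full history and tags are back
```

In the centralized case only the latest files on someone's machine would have survived, so `replacement.log()` would have started over from a single new check-in.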

The talk is a worthwhile watch as a historical retrospective on how far we’ve come with source code management in the last 15 years. We probably wouldn’t have gotten here without Git and/or Mercurial, and without platforms like GitLab, GitHub, etc. solving the human factors side of using these tools for everyday users.

A Footnote on False Memories

When I first queued up this YouTube video I started flashing back to my attempts to play with Git when Linus first released it. It was in a much less usable state at the time. Looking back, I wasn’t sure how much of that was my own inexperience with it, my brain being stuck too deep in a Perforce workflow mindset, or me not giving it enough of a chance. Based on what Linus was saying in this speech it was probably a combination of all three.

As I recall, I played around with it for an afternoon, trying to give it a shot on a simple test project. Even the simplest thing seemed so hard. I vividly remember sitting in my cube in tech support trying to get my brain wrapped around it. After an afternoon of mostly failures I gave up on dabbling with it for a while. My then-current system, Perforce, was working well enough, and as a young engineer I wasn’t in charge of making decisions about that at my company anyway.

I was trying to figure out the approximate year of this memory based on the mental image of the cubicle and the computer I was trying this on. I have a pretty clear picture in my head of the 19" CRT and the cube location. Since the company I worked for at the time was constantly reconfiguring things and moving buildings, that would narrow it down to at least a particular year. Based on all that I concluded that my experimentation was in the late-2000/early-2001 period. Here is where the false memories anecdote comes in.

As I said above, the experience I’m vividly remembering in that location would have to have happened around 2000/2001. The problem is that Linus didn’t even start working on Git until 2005, and I probably wouldn’t have started touching it until 2006. At that point I had started my own company and we were in our first real office. That “vivid memory” of playing with Git in my little tech support cube was several years, several cube changes, five office building changes, and one job change off. Which means I know which office (I had my own office at that point, yay!) and location I would actually have been conducting those experiments in. However, even knowing that now, I can’t conjure up a picture in my mind of actually playing around with it there. The imagery my brain insists on “being real” is the one that snaps me back to that cubicle in 2000/2001. It is such a mindfuck.

2022-02-13

12 min read
